Microprinter

Software / Algorithm

CAD

Mechatronics

Human interaction

The Final product - Microprinter

Microprinter combines mechatronics, electronics and computing to recreate the experience of printing, from hand to paper. It is a machine that recognises a handwritten word, searches for a matching image and prints it, creating a meaningful human interaction as well as a physical output for the user.

The printed outcome - a souvenir for the user (a printed image of their word search)

Stage 1

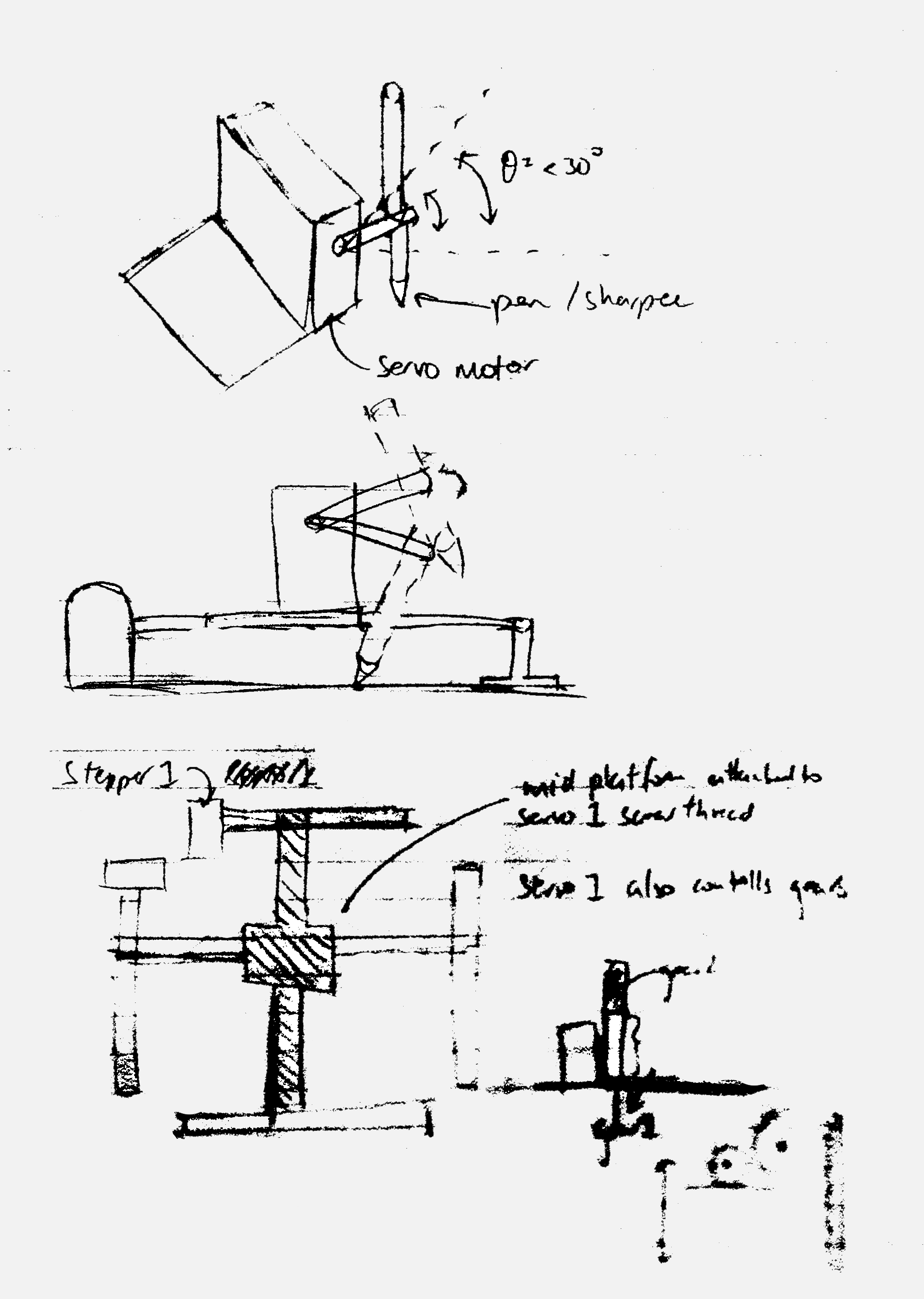

Ideation, concept generation and feasibility assessment of the ideas. This was done mainly through sketches, to visualise the possible ideas and solutions.

The salvaged mini CD stepper motor

The first-generation prototype (looks-like model)

Stage 1 input (unprocessed image from the Pi Camera)

Stage 2 input (processed image)

An example Google Images photo (singing cat)

Stage 4

The Full build:

The build is based on three layers that follow the user's interaction: a piece of paper is inserted on the bottom layer, removed once Microprinter has processed the word, and placed on the top platform, ready for printing.

Top platform - Blue paper in position to print

Minimal wiring on the top level for a cleaner look

Total interaction time:

Up to 7 minutes for densely inked images

Total cost:

£150

Click here to access the full documentation and description of Microprinter!

Click here to access the full Python code of Microprinter on GitHub!

Stage 2

Existing devices were taken apart and their components salvaged, to see whether they could be used in the ideas generated.

Old CD/DVD writers were taken apart to reuse parts such as LEDs, buttons, stepper motors and guide rails.

Stage 3

Coding different processes for the interaction:

Handwritten text capture and processing

This stage uses a Pi Camera to photograph the paper with the word written on it. The photo is then adjusted: its brightness is increased, a threshold is applied and the colour bands are removed. The result is a clean image of the single word.
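The clean-up described above can be sketched in plain Python. This is an illustration rather than the project's code: it assumes the photo is already available as rows of (R, G, B) tuples, and the brightness gain and threshold values are hypothetical. The colour bands are removed with the ITU-R 601-2 luma weights that PIL documents for `Image.convert("L")`.

```python
# Sketch of the capture clean-up step (not the original Microprinter code).
# Assumes the photo is rows of (R, G, B) tuples with 0-255 values.
# BRIGHTNESS_GAIN and THRESHOLD are hypothetical values for illustration.

BRIGHTNESS_GAIN = 1.4  # hypothetical brightness multiplier
THRESHOLD = 128        # hypothetical cut-off between ink and paper


def to_grey(rgb):
    """Drop the colour bands using ITU-R 601-2 luma weights."""
    r, g, b = rgb
    return (r * 299 + g * 587 + b * 114) // 1000


def brighten(value, gain=BRIGHTNESS_GAIN):
    """Scale a grey value up, clamping at the 8-bit maximum of 255."""
    return min(255, int(value * gain))


def binarise(value, threshold=THRESHOLD):
    """Map a pixel to pure black (ink, 0) or pure white (paper, 255)."""
    return 0 if value < threshold else 255


def clean_capture(rgb_rows):
    """Return a black-and-white version of the captured word image."""
    return [[binarise(brighten(to_grey(px))) for px in row] for row in rgb_rows]


if __name__ == "__main__":
    # Tiny 2x3 "photo": dark pen strokes on slightly grey paper.
    photo = [
        [(200, 200, 190), (30, 30, 40), (210, 205, 200)],
        [(25, 20, 30), (190, 195, 185), (40, 35, 45)],
    ]
    for row in clean_capture(photo):
        print(row)  # prints [255, 0, 255] then [0, 255, 0]
```

On a real capture, PIL's `ImageEnhance.Brightness` and `Image.point` can apply the same kind of adjustment to a whole image at once; the per-pixel version simply makes each step visible.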

Machine learning text recognition

Here the image of the word is passed to code built on TensorFlow, which recognises the word, such as ‘bird’, or even a phrase such as ‘singing cat’.
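The page does not describe how the TensorFlow model's output becomes a word. One common design for handwritten word recognition, assumed here purely for illustration, is a network trained with CTC loss that outputs a probability for every character (plus a special "blank") at each horizontal position. Greedy decoding then takes the most likely symbol per position, merges repeats and drops blanks. TensorFlow provides `tf.nn.ctc_greedy_decoder` for this; the plain-Python sketch below, with a hypothetical character set and a hypothetical `one_hot` demo helper, shows the logic.

```python
# Greedy CTC decoding sketch. Assumes (hypothetically) that the recogniser
# outputs one probability row per time step, with one column per character
# in CHARSET plus a final column for the CTC "blank" symbol.

CHARSET = "abcdefghijklmnopqrstuvwxyz "  # hypothetical character set


def greedy_ctc_decode(prob_rows, charset=CHARSET):
    """Pick the most likely symbol per step, merge repeats, then drop blanks."""
    blank = len(charset)  # the blank is the last column
    best = [max(range(len(row)), key=row.__getitem__) for row in prob_rows]
    text = []
    previous = None
    for idx in best:
        # A repeated symbol only counts once unless a blank separates the copies.
        if idx != previous and idx != blank:
            text.append(charset[idx])
        previous = idx
    return "".join(text)


def one_hot(symbol, charset=CHARSET):
    """Hypothetical demo helper: a probability row peaked on one symbol (None = blank)."""
    row = [0.01] * (len(charset) + 1)
    row[len(charset) if symbol is None else charset.index(symbol)] = 0.9
    return row


if __name__ == "__main__":
    steps = ["b", "b", None, "i", "r", "r", None, "d"]
    print(greedy_ctc_decode([one_hot(s) for s in steps]))  # prints bird
```

The blank is what lets a genuine double letter survive: "l", blank, "l" decodes to "ll", while "l", "l" collapses to a single "l".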

Google Images search for the photo and processing into a printable matrix

The recognised word is then searched online, and the first image that can be downloaded without copyright restrictions is taken and processed with Python (BeautifulSoup and PIL) into unsigned 8-bit integers, one per pixel. These are arranged into a greyscale matrix, which is then read by a boolean function.
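A minimal sketch of the final part of this step, assuming the downloaded image has already been converted to greyscale (for example with PIL, whose `Image.tobytes()` on a mode "L" image gives one unsigned byte per pixel, row by row). The image size and the `DARK_LIMIT` cut-off are hypothetical, and `should_dot` stands in for the boolean function.

```python
# Sketch: turn raw unsigned 8-bit greyscale bytes into a matrix, then into
# a boolean "dot / no dot" matrix for the printer (not the original code).
# DARK_LIMIT is a hypothetical cut-off.

DARK_LIMIT = 100  # hypothetical: grey values below this get a pen dot


def to_grey_matrix(raw, width, height):
    """Split a flat bytes object (one unsigned byte per pixel) into rows."""
    if len(raw) != width * height:
        raise ValueError("raw data does not match width * height")
    # Indexing/slicing bytes yields ints in the range 0-255.
    return [list(raw[r * width:(r + 1) * width]) for r in range(height)]


def should_dot(value, limit=DARK_LIMIT):
    """Boolean test applied to each pixel: True means the pen should mark it."""
    return value < limit


def to_dot_matrix(grey_rows):
    """Apply the boolean test to every pixel of the greyscale matrix."""
    return [[should_dot(v) for v in row] for row in grey_rows]


if __name__ == "__main__":
    raw = bytes([255, 20, 255, 90, 180, 0])  # a 3x2 example image
    grey = to_grey_matrix(raw, width=3, height=2)
    print(grey)                 # prints [[255, 20, 255], [90, 180, 0]]
    print(to_dot_matrix(grey))  # prints [[False, True, False], [True, False, True]]
```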

Coding the stepper motors to move together in rows to produce a printer

The stepper motors move the pen along a row, step down one pixel, travel back along the next row in the reverse direction, step down another pixel, and so on. At the same time, a servo motor rotates 30 degrees, allowing the pen to make a dot on the paper.
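The back-and-forth scan can be sketched as a generator that yields pixels in the order the pen visits them. `move_to` and `dot` are hypothetical callbacks standing in for the real stepper-motor and servo code, which this sketch does not reproduce.

```python
# Sketch of the serpentine scan: left-to-right on even rows, right-to-left
# on odd rows, with a pen dot wherever the boolean matrix is True.
# move_to() and dot() are hypothetical stand-ins for the motor code.


def serpentine(dots):
    """Yield (row, col, make_dot) in the order the print head visits pixels."""
    for r, row in enumerate(dots):
        cols = range(len(row)) if r % 2 == 0 else range(len(row) - 1, -1, -1)
        for c in cols:
            yield r, c, row[c]


def print_matrix(dots, move_to, dot):
    """Drive the (hypothetical) motor callbacks along the serpentine path."""
    for r, c, make_dot in serpentine(dots):
        move_to(r, c)  # step the stepper motors to this pixel
        if make_dot:
            dot()      # e.g. swing the servo 30 degrees to mark the paper


if __name__ == "__main__":
    pattern = [[True, False, True],
               [False, True, False]]
    actions = []
    print_matrix(pattern,
                 move_to=lambda r, c: actions.append(("move", r, c)),
                 dot=lambda: actions.append(("dot",)))
    print([(r, c) for r, c, _ in serpentine(pattern)])
    # prints [(0, 0), (0, 1), (0, 2), (1, 2), (1, 1), (1, 0)]
    print(sum(1 for a in actions if a[0] == "dot"))  # prints 3
```

Reversing direction on alternate rows saves a full return move at the end of every row, since the pen simply continues from where the previous row ended.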

The image before printing - after stage 4 code process

The wiring of all components onto one Raspberry Pi